22 November 2023, 09:23

High availability, fault tolerance, security, and performance are crucial quality benchmarks in IT operations. In the dynamic world of IT, companies face the challenge of operating their data centers while adhering to compliance guidelines. That much is common knowledge. But how do they actually manage it?

Recent history has shown that even established cloud providers struggle with the technical challenges of operating a highly available data center: from OS updates to security vulnerabilities and hardware component failures. Outages seem to be part of daily business and should therefore be included in every risk analysis and infrastructure architecture design.

The question is how well companies are prepared for such situations and how effectively they can respond to them. Here, the holistic interaction of operational processes and highly available infrastructure is crucial.

MORE KNOWLEDGE WANTED?

On Tuesday, November 21, 2023, the companies ICONAG-Leittechnik, B-Unity, and the Infrontec Ingenieurgesellschaft hosted the online seminar "Monitoring as a Basis for Data Center High Availability and Energy Efficiency."

From 1:30 PM to 5:30 PM, the following topics were covered in various presentations:

- Operating data centers within a regulatory framework: ensuring resilience and energy optimization
- Legally compliant infrastructure monitoring in data centers: what do the Building Energy Act and the Energy Efficiency Act demand?
- Requirements for building automation in critical infrastructure data centers
- Particularly relevant building automation management functions and data interfaces for data center operations

The speakers brought expert knowledge and extensive experience from responsible roles, for example in the data center of a major German city and at German Air Traffic Control, as well as in the planning, consulting, and implementation of data center infrastructure management. Participation was free of charge.

There is often still a classic silo problem: the IT infrastructure falls under the responsibility of IT, while the supply infrastructure of the data center is the domain of facility management, and the two sides often perceive the requirements very differently. Relevant statistics from industry associations clearly show that the data center supply infrastructure is the primary cause of data center outages, accounting for more than 50 percent of them.

The power supply is inherently the most time-critical and quality-sensitive supply medium: the tolerance (hold-up) time of IT switch-mode power supplies is often no more than about 20 milliseconds (ms). Cooling of the IT equipment, by contrast, is tolerant of disturbances (with the exception of high-performance water cooling systems), because the storage capacity of the cooling medium bridges interruptions of seconds to minutes.
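The gap between these two tolerance windows can be made concrete with a back-of-the-envelope calculation of the thermal buffer, t = m · c · ΔT / P. The sketch below assumes, purely for illustration, that a chilled-water buffer tank alone absorbs the full IT heat load and may warm by a fixed temperature difference; the tank volume, ΔT, and load are hypothetical example values, and losses and mixing effects are ignored.

```python
# Physical constants for water (density approximated as 1000 kg/m^3).
WATER_SPECIFIC_HEAT_KJ_PER_KG_K = 4.186
WATER_DENSITY_KG_PER_M3 = 1000.0

# Upper bound of the switch-mode power supply hold-up time mentioned in the text.
PSU_HOLDUP_TIME_S = 0.020


def cooling_buffer_time_s(volume_m3: float, delta_t_k: float, heat_load_kw: float) -> float:
    """Seconds a water buffer can absorb `heat_load_kw` while warming by `delta_t_k`.

    Simplified model (assumption): no losses, perfect mixing, buffer carries the full load.
    """
    mass_kg = volume_m3 * WATER_DENSITY_KG_PER_M3
    stored_kj = mass_kg * WATER_SPECIFIC_HEAT_KJ_PER_KG_K * delta_t_k
    return stored_kj / heat_load_kw  # kJ / (kJ/s) = s


if __name__ == "__main__":
    # Hypothetical example: 10 m^3 tank, 6 K usable temperature rise, 500 kW IT load.
    t = cooling_buffer_time_s(10.0, 6.0, 500.0)
    print(f"cooling buffer: {t:.0f} s (~{t / 60:.1f} min)")
    print(f"ratio to PSU hold-up time: {t / PSU_HOLDUP_TIME_S:,.0f}x")
```

With these assumed numbers the buffer bridges roughly 500 seconds (about 8 minutes), some four orders of magnitude longer than the 20 ms a power supply can ride through.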

The EN 50160 standard describes the requirements for voltage quality in low-voltage networks. Even small deviations outside the permissible range can cause switch-mode power supplies and hardware to fail.
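A monitoring system can evaluate such voltage criteria automatically. The sketch below assumes the commonly cited EN 50160 limits for supply voltage variations in low-voltage networks: over one week, at least 95 % of the 10-minute mean RMS values within Un ± 10 %, and all of them within Un +10 % / −15 %. The exact criteria depend on the edition of the standard that applies, so treat the thresholds as assumptions to verify; the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

NOMINAL_VOLTAGE_V = 230.0  # nominal phase-to-neutral voltage Un in public LV networks


@dataclass
class WeeklyVoltageAssessment:
    share_within_10pct: float   # fraction of 10-min means within Un +/-10 %
    all_within_wide_band: bool  # every 10-min mean within Un +10 % / -15 %
    compliant: bool


def assess_week(ten_min_means_v: Sequence[float],
                un: float = NOMINAL_VOLTAGE_V) -> WeeklyVoltageAssessment:
    """Check one week of 10-minute mean RMS voltages against the assumed criteria:
    at least 95 % within Un +/-10 % and all values within Un +10 % / -15 %."""
    if not ten_min_means_v:
        raise ValueError("no measurements")
    within_narrow = sum(1 for v in ten_min_means_v if 0.90 * un <= v <= 1.10 * un)
    share = within_narrow / len(ten_min_means_v)
    wide_ok = all(0.85 * un <= v <= 1.10 * un for v in ten_min_means_v)
    return WeeklyVoltageAssessment(share, wide_ok, share >= 0.95 and wide_ok)


if __name__ == "__main__":
    # Hypothetical week (7 * 24 * 6 = 1008 intervals) with 8 undervoltage intervals.
    week = [230.0] * 1000 + [205.0] * 8
    print(assess_week(week))
```

Note that 10-minute averages hide dips and short interruptions in the millisecond range, which are exactly the events that exceed a power supply's hold-up time; these require event-based power quality recording in addition to such statistics.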

The requirements for so-called environmental conditions in the data center are defined in EN 50600-2-3 with reference to ASHRAE TC 9.9. Unlike the highly sensitive power supply, climatic fluctuations, including temporary exceedances of temperature and humidity limits, do not directly lead to IT system failures, especially since such parameter changes mostly unfold over seconds or longer rather than milliseconds.
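Because climatic deviations develop comparatively slowly, a two-stage evaluation of the supply air temperature is a natural fit: a warning when leaving the recommended range and an alarm when leaving the allowable range. The sketch assumes the widely cited ASHRAE TC 9.9 recommended range of 18 to 27 °C and the class A1 allowable range of 15 to 32 °C at the IT inlet; verify both against the current guideline edition and your equipment class. Humidity is left out for brevity.

```python
from enum import Enum


class InletStatus(Enum):
    RECOMMENDED = "within recommended range"
    ALLOWABLE = "outside recommended, within allowable range"
    OUT_OF_RANGE = "outside allowable range"


# Assumed limits in deg C (ASHRAE TC 9.9 recommended range, class A1 allowable range).
RECOMMENDED_C = (18.0, 27.0)
ALLOWABLE_A1_C = (15.0, 32.0)


def classify_inlet_temperature(temp_c: float) -> InletStatus:
    """Classify a server inlet (supply air) temperature against the assumed envelope."""
    low, high = RECOMMENDED_C
    if low <= temp_c <= high:
        return InletStatus.RECOMMENDED
    low, high = ALLOWABLE_A1_C
    if low <= temp_c <= high:
        return InletStatus.ALLOWABLE
    return InletStatus.OUT_OF_RANGE


if __name__ == "__main__":
    for reading in (22.5, 29.0, 34.0):
        print(reading, classify_inlet_temperature(reading).value)
```

Mapping "allowable" to a warning leaves time to react before the hardware limits are reached, which matches the slower dynamics described above.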

Building services, hardware, and so on

The supply infrastructure is largely based on technical building equipment (TBE), which, although sometimes of higher grade in the data center environment, is usually not specialized technology. These are mechanical systems that are exposed to the elements and subject to natural wear and tear.

IT hardware, in contrast, consists of components with relatively little wear, operated under optimized environmental conditions. Redundancy and fault tolerance are the design basis in the server world, and at the logical layer, fault tolerance is complemented at the OS or application level. Furthermore, servers are replaced after a few years for reasons of technology, performance, or efficiency and scalability, so hardware malfunctions are rarely caused by aging or wear.

IT is easier and more flexible to manage in the logical, software-based world. There, interconnections between systems can often be changed simply by adjusting parameters, whereas in the supply infrastructure, pipes and cables cannot easily be reconfigured or isolated in the event of a fault. A small leak in an unfavorably designed pipe network can therefore lead to a failure if the affected section cannot be isolated without interrupting supply. Likewise, in electrical engineering, parallel circuit breakers illustrate a clear conflict between VDE regulations and availability when it comes to redundancy.

Compared to IT, the supply infrastructure is more static, so fault tolerance must be built into its concept from the outset, when the infrastructure is constructed.
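The leak example can be checked at the design stage by treating the supply network as a graph and asking, for every pipe or cable section, whether isolating it cuts off a consumer. The sketch below is an illustrative single-point-of-failure analysis under simplifying assumptions: every segment can be isolated on its own (valves or breakers at both ends), and the capacity of the remaining paths is ignored. The network, node names, and functions are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, List, Optional, Set, Tuple

# (segment_id, node_a, node_b): a pipe or cable section that can be isolated on its own.
Segment = Tuple[str, str, str]


def reachable(sources: Iterable[str], segments: List[Segment],
              isolated: Optional[str] = None) -> Set[str]:
    """Breadth-first search: all nodes still fed by a source when `isolated` is shut off."""
    adjacency: Dict[str, List[str]] = defaultdict(list)
    for seg_id, a, b in segments:
        if seg_id != isolated:
            adjacency[a].append(b)
            adjacency[b].append(a)
    seen = set(sources)
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for neighbour in adjacency[node]:
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen


def single_points_of_failure(sources: List[str], consumers: List[str],
                             segments: List[Segment]) -> Dict[str, List[str]]:
    """Segments whose isolation leaves at least one consumer without supply."""
    critical: Dict[str, List[str]] = {}
    for seg_id, _, _ in segments:
        fed = reachable(sources, segments, isolated=seg_id)
        cut_off = [c for c in consumers if c not in fed]
        if cut_off:
            critical[seg_id] = cut_off
    return critical


if __name__ == "__main__":
    # Hypothetical chilled-water network: two chillers, two headers with a double
    # cross-connection; CRAH1 is fed from both headers, CRAH2 from a single branch.
    network = [
        ("P1", "CH1", "H1"), ("P2", "CH2", "H2"),
        ("P3", "H1", "H2"), ("P4", "H1", "H2"),
        ("P5", "H1", "CRAH1"), ("P6", "H2", "CRAH1"),
        ("P7", "H2", "CRAH2"),
    ]
    print(single_points_of_failure(["CH1", "CH2"], ["CRAH1", "CRAH2"], network))
```

For this example network only the single branch P7 is flagged: a leak there cannot be isolated without losing CRAH2.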

Regulatory Framework

Critical infrastructure operators also depend on data centers and IT to safeguard their business and supply processes. Especially in the broader societal context, the failure of critical infrastructure companies and facilities poses a particular risk. The BSI IT-Grundschutz (IT baseline protection) forms the regulatory framework for the operation of these critical infrastructure facilities and sets requirements for high availability, and thus also for the monitoring of critical infrastructure and data centers.

Here is an excerpt from §8a para. 1 of the BSIG (Act on the Federal Office for Information Security): "Operators of critical infrastructures are obliged to ... take appropriate organizational and technical measures to prevent disruptions to the availability, integrity, authenticity, and confidentiality of their information technology systems, components, or processes that are essential for the functionality of the critical infrastructures they operate. ..."

The EU directives NIS2 (Network and Information Security) and CER (Critical Entities Resilience), which are to be transposed into German law by October 2024, aim to ensure the availability and resilience of infrastructures such as data centers. In addition, the Energy Efficiency Act (EnEfG) and the Building Energy Act (GEG) require monitoring in data centers for energy data acquisition and energy management.

DIN EN 50600 still has room for improvement: the data center standard currently says little about how monitoring should be implemented. EN 50600-2-3 only specifies threshold values and refers to the monitoring of supply air temperatures. Especially with regard to implementing the EnEfG and KRITIS requirements, the standard is expected to close this gap.

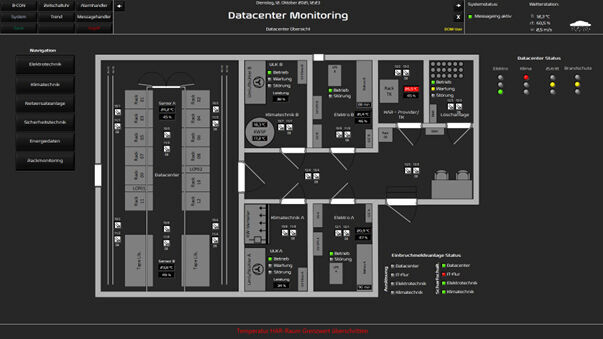

The significance and benefits of monitoring

A comprehensive high-availability concept for the data center infrastructure at the physical and logical levels can prevent failures in most cases through redundancy and autonomy. Monitoring not only detects faults and deviations from the target state; depending on how deeply it is integrated, it can also detect developing faults early and derive a need for action before a failure occurs. Intermittent errors, or systems operating close to their limits, can be precursors of failures.

Effective monitoring gives stakeholders in data center operations sufficient transparency. Combined with a well-designed event and alarm management system, monitoring integrates seamlessly into data center operations and adds value through fast, efficient situation assessment and fault clearance, as well as traceability through logging and the recording of measurement and trend data.
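A basic building block of alarm management is suppressing alarm "flapping" when a value hovers around its threshold. The sketch below shows a hysteresis alarm that raises at one value and clears only at a lower one, and keeps a log of state changes for traceability. The class name and the thresholds in the example are hypothetical; production systems typically add time delays, acknowledgement, and priorities on top of this.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class HysteresisAlarm:
    """Raise when value >= raise_at; clear only when value <= clear_at (clear_at < raise_at)."""
    name: str
    raise_at: float
    clear_at: float
    active: bool = False
    log: List[Tuple[float, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.clear_at >= self.raise_at:
            raise ValueError("clear_at must be below raise_at")

    def update(self, timestamp: float, value: float) -> None:
        """Evaluate one measurement and log every state change with its timestamp."""
        if not self.active and value >= self.raise_at:
            self.active = True
            self.log.append((timestamp, f"{self.name} RAISED at {value}"))
        elif self.active and value <= self.clear_at:
            self.active = False
            self.log.append((timestamp, f"{self.name} CLEARED at {value}"))


if __name__ == "__main__":
    alarm = HysteresisAlarm("supply air temperature", raise_at=27.0, clear_at=26.0)
    for t, reading in enumerate([26.8, 27.1, 26.9, 27.2, 26.5, 25.9]):
        alarm.update(t, reading)
    for entry in alarm.log:
        print(entry)
```

For the example readings the alarm is raised once and cleared once, whereas a single threshold at 27 °C would have toggled four times.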

In addition, monitoring is a form of documentation that maps the data center as a "digital twin". Energy data acquisition across the infrastructure is also part of monitoring and is now also required by the EnEfG.
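A typical use of this energy data is a key figure such as power usage effectiveness (PUE), defined in EN 50600-4-2 as total facility energy divided by IT equipment energy over the same period. The sketch below derives it from two snapshots of cumulative energy counters; the meter IDs, readings, and the assignment of meters to "facility" and "IT" are hypothetical, and in practice that assignment follows the measurement concept defined for the site.

```python
from typing import Dict, Set

# Cumulative energy counter values in kWh, keyed by (hypothetical) meter ID.
Readings = Dict[str, float]


def consumption(start: Readings, end: Readings) -> Dict[str, float]:
    """Energy per meter over the period, from two snapshots of cumulative counters."""
    used: Dict[str, float] = {}
    for meter, start_kwh in start.items():
        delta = end[meter] - start_kwh
        if delta < 0:
            raise ValueError(f"counter {meter} went backwards (reset or meter replacement?)")
        used[meter] = delta
    return used


def pue(start: Readings, end: Readings,
        facility_meters: Set[str], it_meters: Set[str]) -> float:
    """PUE = total facility energy / IT equipment energy over the same period."""
    used = consumption(start, end)
    total_kwh = sum(used[m] for m in facility_meters)
    it_kwh = sum(used[m] for m in it_meters)
    if it_kwh <= 0:
        raise ValueError("no IT energy recorded in this period")
    return total_kwh / it_kwh


if __name__ == "__main__":
    start = {"grid_main": 1_000_000.0, "ups_out_a": 400_000.0, "ups_out_b": 380_000.0}
    end = {"grid_main": 1_130_000.0, "ups_out_a": 450_000.0, "ups_out_b": 430_000.0}
    value = pue(start, end, {"grid_main"}, {"ups_out_a", "ups_out_b"})
    print(f"PUE = {value:.2f}")
```

Working with counter deltas rather than instantaneous power readings keeps the figure robust against gaps in polling, and the plausibility check catches counter resets, which would otherwise silently distort the reported value.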

Challenges in monitoring

With increasing infrastructure complexity and hybrid architectures, the technical demands are rising. At the same time, IT departments face a shortage of skilled workers and, in addition to their daily tasks, must implement new security and compliance guidelines or catch up on a backlog of digitization projects.

As a result, expanding and maintaining the monitoring system is often neglected. However, once monitoring becomes outdated due to a lack of maintenance or, worse, displays or reports incorrect messages or states, the system's acceptance and usefulness decline.

Owing to its different origins, the infrastructure level offers a far more diverse range of protocols for integrating building services equipment into monitoring than IT does. While SNMP, IPMI, and REST APIs have become familiar standards for IT administrators, the building services world uses protocols such as BACnet, Modbus, KNX, M-Bus, Profibus, and several others.

It is also not uncommon to encounter proprietary manufacturer interfaces and protocols that must likewise be integrated into a holistic, seamless monitoring solution. The task of the system integrator in the field of building automation is to connect these protocols to the monitoring system, either directly or via gateways.
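A recurring integration detail with Modbus is that each register holds only 16 bits, so measured values are often split across two registers or scaled integers, and the word order differs between devices. The sketch below decodes register values that a Modbus client has already read; it uses only the Python standard library, and the register layout, word order, and scale factor are assumptions that must come from the respective device documentation.

```python
import struct
from typing import Sequence


def registers_to_float32(regs: Sequence[int], word_swap: bool = False) -> float:
    """Combine two 16-bit Modbus registers into an IEEE 754 float32.

    Each register is transmitted big-endian; whether the high word comes first is
    device-specific, hence `word_swap`.
    """
    if len(regs) != 2 or not all(0 <= r <= 0xFFFF for r in regs):
        raise ValueError("expected two 16-bit register values")
    high, low = (regs[1], regs[0]) if word_swap else (regs[0], regs[1])
    return struct.unpack(">f", struct.pack(">HH", high, low))[0]


def scaled_int16(reg: int, scale: float) -> float:
    """Interpret one register as a signed 16-bit integer and apply a documented scale factor."""
    if not 0 <= reg <= 0xFFFF:
        raise ValueError("expected a 16-bit register value")
    value = reg - 0x10000 if reg & 0x8000 else reg
    return value * scale


if __name__ == "__main__":
    # Hypothetical raw values, e.g. a phase voltage stored as float32 across two registers.
    print(registers_to_float32([0x4366, 0x8000]))                  # high word first
    print(registers_to_float32([0x8000, 0x4366], word_swap=True))  # low word first
    print(scaled_int16(0xFFF6, 0.1))                               # signed, scale 0.1
```

Getting the word order wrong does not raise an error but yields a plausible-looking wrong number, which is one reason such mappings should be validated against a known reference reading during commissioning.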

Under these conditions, selecting the right monitoring tool is crucial. Open systems and interface concepts have proven to be flexible and sustainable.

Integration should place particular emphasis on IT security. For historical and design reasons, some interfaces offer no IT security at all; if used insecurely, they create potential for sabotage and can thus reduce availability. Secure protocols and interfaces such as BACnet/SC, KNX Secure, OPC UA, and MQTT are increasingly making their way into this space. (On this topic, Christian Wild, Managing Director of partner company ICONAG-Leittechnik in Idar-Oberstein, has already written an article: "Law and Common Sense: IT Security and Availability in Building Automation in Data Centers".)

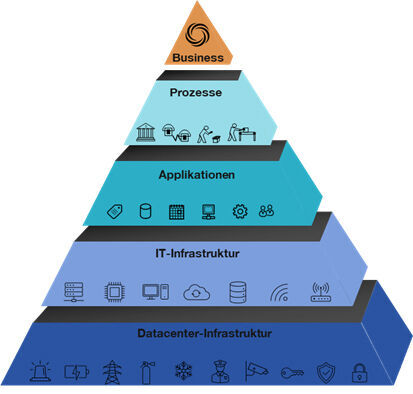

Levels of Monitoring

In terms of securing business processes, monitoring extends beyond the supply infrastructure alone. Each level depends on the level below it, so the business process depends on this entire "stack" of infrastructure, hardware, software, and processes.

Since each level is its own technical discipline, monitoring tools on the market typically cover one or at most two of these levels. Given how individually different infrastructures are, a single tool that covers all areas equally well is hardly feasible. It is therefore common to run several specialized tools side by side.
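When several specialized tools report on different levels, the dependency of each level on the one below can be used to combine their findings. The sketch below propagates status upward through a hypothetical dependency map, so that a degraded UPS reported by building management shows up at the business process it ultimately affects. It deliberately uses worst-case propagation and ignores redundancy; a real model would need rules such as "k of n components required" for redundant pairs.

```python
from enum import IntEnum
from typing import Dict, FrozenSet, List


class Status(IntEnum):
    OK = 0
    DEGRADED = 1
    FAILED = 2


def effective_status(item: str, own: Dict[str, Status], depends_on: Dict[str, List[str]],
                     _path: FrozenSet[str] = frozenset()) -> Status:
    """Worst of an item's own status and the effective status of everything it depends on."""
    if item in _path:
        raise ValueError(f"dependency cycle involving {item}")
    worst = own.get(item, Status.OK)
    for dependency in depends_on.get(item, []):
        worst = max(worst, effective_status(dependency, own, depends_on, _path | {item}))
    return worst


if __name__ == "__main__":
    # Hypothetical stack: business process -> application -> VM cluster -> rack -> supply.
    depends_on = {
        "order-processing": ["erp-app"],
        "erp-app": ["vm-cluster"],
        "vm-cluster": ["rack-A12"],
        "rack-A12": ["ups-1", "crah-2"],
    }
    own = {"ups-1": Status.DEGRADED}  # e.g. reported by the building management system
    print(effective_status("order-processing", own, depends_on).name)
```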

Who gets what information?

Depending on the hierarchy level, there are specialized monitoring tools. The challenge lies in providing relevant information for different user groups.

While technicians require detailed insights, managers rely on dashboards and KPIs. For role-based provision of information, dashboard applications are therefore well suited: they can retrieve data from monitoring systems at different levels and present it in the context of securing business processes, often in the form of traffic lights.
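The role-based idea can be illustrated with a small aggregation layer: the same events from several tools feed both a condensed traffic-light view per business process and a detailed, discipline-specific list. The event structure, tool names, and three-step severity scale below are hypothetical assumptions for the sketch, not the data model of any particular dashboard product.

```python
from dataclasses import dataclass
from typing import Dict, List

SEVERITY_TO_LIGHT = {0: "green", 1: "yellow", 2: "red"}


@dataclass(frozen=True)
class Event:
    source_tool: str       # e.g. building management or server monitoring (hypothetical names)
    business_process: str
    severity: int          # 0 = ok, 1 = warning, 2 = critical
    detail: str


def manager_view(events: List[Event], processes: List[str]) -> Dict[str, str]:
    """One traffic light per business process: the worst severity reported by any tool."""
    worst: Dict[str, int] = {p: 0 for p in processes}
    for e in events:
        if e.business_process in worst:
            worst[e.business_process] = max(worst[e.business_process], e.severity)
    return {p: SEVERITY_TO_LIGHT[s] for p, s in worst.items()}


def technician_view(events: List[Event], source_tool: str) -> List[str]:
    """Detailed, non-OK events from the tool/discipline a technician is responsible for."""
    return [f"[{e.severity}] {e.business_process}: {e.detail}"
            for e in events if e.source_tool == source_tool and e.severity > 0]


if __name__ == "__main__":
    events = [
        Event("BMS", "payments", 1, "CRAH-2 filter pressure high"),
        Event("server-mon", "payments", 0, "all hosts up"),
        Event("server-mon", "webshop", 2, "db-01 unreachable"),
    ]
    print(manager_view(events, ["payments", "webshop", "hr"]))
    print(technician_view(events, "BMS"))
```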

Cost, effort, and benefit

The costs of an IT outage keep rising as companies become increasingly digitized. Within risk management, it is therefore necessary to establish suitable measures for risk control and availability assurance. For commercial, vendor-supported solutions, the effort of introducing a monitoring tool breaks down into licensing costs and system integration.
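A common way to weigh outage risk against monitoring costs is the annualized loss expectancy (ALE), the cost of a single outage multiplied by the expected number of outages per year. The sketch below compares the ALE with and without monitoring against the yearly cost of monitoring. All figures in the example are hypothetical, and the assumption that monitoring shortens and reduces outages has to be justified for each individual case.

```python
def annualized_loss(cost_per_hour: float, outage_hours: float, outages_per_year: float) -> float:
    """ALE = single loss expectancy (cost of one outage) x annual rate of occurrence."""
    single_loss = cost_per_hour * outage_hours
    return single_loss * outages_per_year


def monitoring_net_benefit(ale_without: float, ale_with: float,
                           annual_monitoring_cost: float) -> float:
    """Yearly risk reduction minus the yearly cost of monitoring (licences, integration, operation)."""
    return (ale_without - ale_with) - annual_monitoring_cost


if __name__ == "__main__":
    # Hypothetical: EUR 50,000 per outage hour; monitoring assumed to cut outage duration
    # from 4 h to 1 h and frequency from 0.5 to 0.3 per year, at EUR 40,000 per year.
    without = annualized_loss(50_000, 4, 0.5)
    with_monitoring = annualized_loss(50_000, 1, 0.3)
    print(without, with_monitoring, monitoring_net_benefit(without, with_monitoring, 40_000))
```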

Licensing costs typically consist of a base price for the software plus additional fees for modules containing features and interfaces, depending on the number of data points or metrics to be monitored. Subscription models are also available; they can be scaled freely to current needs and are billed as monthly or yearly usage fees.
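The two licensing models can be compared by their cumulative cost over time. The sketch below models a one-off licence (base price, module fees, and a per-data-point price) against a monthly per-data-point subscription and finds the first year in which the subscription becomes more expensive. The yearly maintenance share on the licence and all prices are hypothetical assumptions, and integration effort, which both models incur, is left out.

```python
from typing import Callable, Optional


def perpetual_total(years: int, base: float, modules: float, per_point: float,
                    points: int, maintenance_rate: float = 0.0) -> float:
    """One-off licence (base + modules + data points) plus an assumed yearly maintenance share."""
    licence = base + modules + per_point * points
    return licence * (1 + maintenance_rate * years)


def subscription_total(years: int, monthly_per_point: float, points: int) -> float:
    """Cumulative subscription fees, billed monthly per monitored data point."""
    return 12 * years * monthly_per_point * points


def break_even_year(perpetual: Callable[[int], float], subscription: Callable[[int], float],
                    horizon_years: int) -> Optional[int]:
    """First year in which the cumulative subscription cost exceeds the perpetual licence."""
    for year in range(1, horizon_years + 1):
        if subscription(year) > perpetual(year):
            return year
    return None


if __name__ == "__main__":
    # Hypothetical prices in EUR for 5,000 data points.
    year = break_even_year(
        lambda y: perpetual_total(y, 20_000, 10_000, 2.0, 5_000, maintenance_rate=0.20),
        lambda y: subscription_total(y, 0.25, 5_000),
        horizon_years=10,
    )
    print(year)
```

With these assumed prices the subscription is cheaper for the first five years and more expensive from year six onward; the result shifts considerably with the number of data points, which is why the expected growth of the monitored infrastructure belongs in the comparison.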

Furthermore, monitoring is a fundamental component of automation, because it provides the sensor input data needed to compare desired and actual states. Whether in data center or IT infrastructure, automations are triggered by measured data and events. Proactive use of resources relies on historical trend data.
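The desired-versus-actual comparison at the heart of such automation can be sketched as a small reconciliation step: for each data point, compare the measured value with its setpoint and derive an action if the deviation exceeds a tolerance. The data point names, setpoints, and tolerances below are hypothetical, and the sketch only derives actions; executing them would be the job of the building automation or orchestration layer.

```python
from typing import Dict, List, Tuple


def reconcile(desired: Dict[str, float], actual: Dict[str, float],
              tolerance: Dict[str, float]) -> List[Tuple[str, str]]:
    """Compare desired and actual values and return (data point, action) pairs."""
    actions: List[Tuple[str, str]] = []
    for point, target in desired.items():
        if point not in actual:
            # A missing measurement is itself a fault: automation must not act blindly.
            actions.append((point, "no measurement: raise sensor alarm"))
            continue
        deviation = actual[point] - target
        if abs(deviation) > tolerance.get(point, 0.0):
            direction = "decrease" if deviation > 0 else "increase"
            actions.append((point, f"{direction} by {abs(deviation):.1f}"))
    return actions


if __name__ == "__main__":
    desired = {"supply_air_c": 22.0, "chw_supply_c": 16.0, "dp_pa": 20.0}
    actual = {"supply_air_c": 24.3, "chw_supply_c": 16.2}
    tolerance = {"supply_air_c": 1.0, "chw_supply_c": 0.5}
    for point, action in reconcile(desired, actual, tolerance):
        print(point, "->", action)
```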


About the Author

Sebastian Mitscherlich is a Consultant specializing in Automation and Data Centers at B-Unity GmbH in Breuberg.

The young IT consulting firm specializes in Application Lifecycle Management and data center infrastructure. It provides consulting services to its clients for IT operations. In the field of Data Centers, B-Unity assists its clients in planning, designing, operating, and optimizing data centers according to the technical and procedural requirements of EN50600. One of the key focus areas is Data Center Monitoring, including system integration, IT security, and the establishment of management and control facilities.

He says, "Efficient monitoring is essential for highly available and complex IT landscapes. It not only helps prevent outages but also optimizes operational processes. The individual requirements of a company should be considered when selecting and implementing a monitoring system. Long-term success depends not only on implementation but also on continuous operation and regular adaptation of the monitoring system."

The ICONAG MBE (Management and Operating Device) software has been certified as B-AWS (BACnet Advanced Workstation) according to the latest BACnet Revision 19.
